Security and privacy are often seen as opposite, irreconcilable goals: a zero-sum game. Because the stakes are high, the debate is often heated and emotional. Privacy advocates and security hawks cling to rigid viewpoints, fighting each other in an aging trench war. As a result, measures to increase our security disregard our privacy, and privacy-enhancing technologies do very little to address legitimate security concerns. This is bad for our privacy, for our security, and for society at large: “It is highly unlikely that either extreme—total surveillance or total privacy—is good for our society.” But are privacy and security really a zero-sum game?


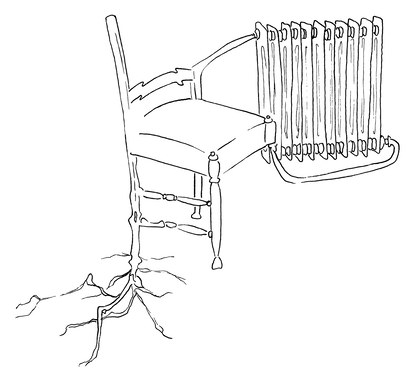

(This is the seventh myth discussed in my book Privacy Is Hard and Seven Other Myths: Achieving Privacy through Careful Design, which will appear on October 5, 2021 from MIT Press. The image is courtesy of Gea Smidt.)

In fact it is not, and the gap between security and privacy goals can often be bridged with a little effort.

A famous example is the invention of untraceable digital cash by David Chaum. Traditional cash, and especially coins, are untraceable: looking in someone’s wallet will not tell you where they got their money from, nor can the supermarket tell at the end of the day who its customers were and what each of them bought. Digital payment schemes, on the other hand, are typically traceable because they are account-based: credit card numbers, for example, allow a payment to be traced to the card holder. In essence, this traceability ensures that money cannot be spent twice: once it leaves the account, it is no longer under the control of the user.

A natural idea to avoid account-based schemes is to mimic traditional cash using digital equivalents. Such digital coins need to satisfy three properties: they must be unforgeable and untraceable, and double spending must be prevented (unlike physical coins, digital coins are trivial to duplicate). A natural way to make coins unforgeable is to let the issuer sign them using a digital signature. Such signatures also make each coin unique, so the bank can check for double spending. Unfortunately, this also means coins are traceable. David Chaum resolved this apparent paradox by inventing blind signatures. Such signatures allow a bank to sign a coin without knowing (and later recognising) which coin it actually signed. This way, issued coins cannot be linked to coins that are spent later, which guarantees untraceability. This is the general idea; the details are described in the book.
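The blinding trick can be sketched in the RSA-based form commonly used to explain Chaum’s idea. This is a toy illustration, not the construction from the book: the key uses the tiny textbook primes 61 and 53 (hopelessly insecure), and `coin_serial`, `deposit` and the `spent` set are hypothetical names introduced here. The bank only ever sees `m * r^e mod N`, which, for a random `r`, is a random-looking number unrelated to the coin it will later see deposited.

```python
import hashlib
import math
import secrets

# Toy RSA key for the bank (textbook numbers; far too small to be secure).
P, Q = 61, 53
N = P * Q                            # modulus 3233
E = 17                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (2753)

def coin_serial(data: bytes) -> int:
    """Hypothetical encoding of a coin's random serial number into Z_N."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N

def blind(m: int) -> tuple[int, int]:
    """User: hide m by multiplying it with r^e for a random r coprime to N."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return (m * pow(r, E, N)) % N, r

def bank_sign(blinded: int) -> int:
    """Bank: sign the blinded value; it learns nothing about m itself."""
    return pow(blinded, D, N)

def unblind(blind_sig: int, r: int) -> int:
    """User: (m * r^e)^d = m^d * r, so dividing by r leaves a signature on m."""
    return (blind_sig * pow(r, -1, N)) % N

def verify(m: int, sig: int) -> bool:
    return pow(sig, E, N) == m

spent: set[int] = set()              # serials the bank has already accepted

def deposit(m: int, sig: int) -> bool:
    """Bank: accept a coin only if it is validly signed and not spent before."""
    if not verify(m, sig) or m in spent:
        return False
    spent.add(m)
    return True

m = coin_serial(b"coin-0001")
blinded, r = blind(m)                # the bank only ever sees `blinded`
sig = unblind(bank_sign(blinded), r)
print(verify(m, sig))                # True
print(deposit(m, sig))               # True
print(deposit(m, sig))               # False: double spending detected
```

Note that double-spending detection here works without identifying anyone: the bank merely refuses a serial it has seen before.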

Chaum’s ideas have been generalised to a concept called revocable privacy. Revocable privacy is a design principle for building systems that balance security and privacy needs. The underlying principle is ‘privacy unless’. This means that the system must be designed to guarantee (in technical terms) the privacy of its users unless a user violates a predefined rule. In that case, (personal) information will be released to authorized parties. Several techniques exist to implement revocable privacy. One technique, also invented by David Chaum, allows one to embed the identity of the owner of a coin inside it in such a way that it can only be recovered if it is spent more than once (i.e. if the owner breaks the double spending rule).
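The principle of “one spend reveals nothing, two spends reveal the owner” can be illustrated with a simplified secret-line sketch. This is not Chaum’s actual construction; it is a swapped-in illustration of the same idea. Assumptions: identities are numbers in a prime field, each coin fixes a random line `y = id + slope * x`, and each shop sends a nonzero random challenge `x`. A single point on a line leaves its intercept completely undetermined, but two points fix it. A real scheme would also bind the line into the bank-signed coin so the owner cannot change it between spends; that is omitted here, and `Coin` and `recover_identity` are hypothetical names.

```python
import secrets

PRIME = 2**61 - 1  # toy field modulus (a Mersenne prime), an assumption of this sketch

class Coin:
    """Hypothetical coin: the owner's identity is the intercept of a secret line."""

    def __init__(self, owner_id: int):
        self.owner_id = owner_id % PRIME
        self.slope = secrets.randbelow(PRIME)  # fresh random slope per coin

    def spend(self, challenge: int) -> int:
        """Answer a shop's challenge x with y = id + slope * x (mod PRIME)."""
        if challenge % PRIME == 0:
            raise ValueError("challenge 0 would reveal the identity directly")
        return (self.owner_id + self.slope * challenge) % PRIME

def recover_identity(x1: int, y1: int, x2: int, y2: int) -> int:
    """Bank: two answers to different challenges fix the line, hence its intercept."""
    slope = (y1 - y2) * pow((x1 - x2) % PRIME, -1, PRIME) % PRIME
    return (y1 - slope * x1) % PRIME

coin = Coin(owner_id=424242)
y1 = coin.spend(5)     # first spend: y1 alone says nothing about the owner
y2 = coin.spend(11)    # second spend of the same coin breaks the rule
print(recover_identity(5, y1, 11, y2))  # 424242
```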

Another technique, called distributed encryption, addresses other scenarios, like that of the ‘canvas cutters’. These criminal gangs roam the highways looking for trucks with valuable cargo. They would be easy to spot if you scanned the license plates of every car entering the car parks along a stretch of highway: the cars driven by the canvas cutters would be seen visiting many of them. However, as sizeable by-catch, you would also record the license plates of all passers-by that happen to stop at one of the car parks. This is clearly undesirable from a privacy point of view. Distributed encryption prevents this. With this technique, license plates are encrypted the moment they are scanned, and only the corresponding ciphertext share is stored. At the end of the day, these ciphertext shares can only be decrypted if enough shares for the same license plate, scanned at different car parks, were collected. This threshold is fixed and cannot be changed after the fact. By choosing a sufficiently high threshold (say 2 or 3 in this case), the privacy of innocent passers-by is guaranteed, while the canvas cutters cannot evade detection.
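The real distributed encryption scheme is more involved, but its threshold behaviour can be approximated with Shamir secret sharing over a prime field. The following is a rough analogue under strong assumptions that are not part of the original scheme: all scanners share a hypothetical secret key `KEY` (so a leaked key breaks everything), each car park has a positive index, the plate is encoded as the constant term of a polynomial whose other coefficients are derived from the plate with `KEY`, and a keyed `tag` groups each day’s shares per plate (which also lets whoever holds the shares link repeat sightings). Because every scanner derives the same polynomial for the same plate, shares from different car parks combine, while repeat visits to one car park yield identical shares and count only once.

```python
import hashlib
import hmac
from collections import defaultdict

PRIME = 2**127 - 1        # Mersenne prime; the field for Shamir sharing
THRESHOLD = 3             # distinct car parks needed to reveal a plate
KEY = b"scanner-secret"   # hypothetical key shared by all scanners

def _coeff(plate: str, j: int) -> int:
    """Deterministic pseudo-random coefficient j of the plate's polynomial."""
    mac = hmac.new(KEY, b"coef%d|" % j + plate.encode(), hashlib.sha256).digest()
    return int.from_bytes(mac, "big") % PRIME

def encrypt_share(plate: str, car_park: int) -> tuple[bytes, int, int]:
    """Scanner at car park `car_park` (1, 2, ...) stores (tag, x, f(x)) for a plate."""
    if car_park <= 0:
        raise ValueError("car park index must be positive; f(0) is the plate itself")
    secret = int.from_bytes(plate.encode(), "big")
    if secret >= PRIME:
        raise ValueError("plate too long for this toy encoding")
    coeffs = [secret] + [_coeff(plate, j) for j in range(1, THRESHOLD)]
    y = sum(c * pow(car_park, j, PRIME) for j, c in enumerate(coeffs)) % PRIME
    tag = hmac.new(KEY, b"tag|" + plate.encode(), hashlib.sha256).digest()
    return tag, car_park, y

def _interpolate_at_zero(points: list[tuple[int, int]]) -> int:
    """Lagrange interpolation: recover f(0) from THRESHOLD points (x, y)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * -xj % PRIME
                den = den * (xi - xj) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total

def end_of_day(shares: list[tuple[bytes, int, int]]) -> list[str]:
    """Decrypt only plates seen at THRESHOLD or more distinct car parks."""
    by_plate: dict[bytes, dict[int, int]] = defaultdict(dict)
    for tag, x, y in shares:
        by_plate[tag][x] = y          # repeat visits to one car park count once
    revealed = []
    for points in by_plate.values():
        if len(points) >= THRESHOLD:
            secret = _interpolate_at_zero(list(points.items())[:THRESHOLD])
            revealed.append(secret.to_bytes((secret.bit_length() + 7) // 8, "big").decode())
    return revealed

day = [encrypt_share("AB-123-C", p) for p in (1, 2, 3)]   # visits three car parks
day += [encrypt_share("XY-999-Z", p) for p in (1, 1, 4)]  # only two distinct car parks
print(end_of_day(day))  # ['AB-123-C']
```

With fewer than `THRESHOLD` distinct shares, the plate is hidden from anyone who does not hold `KEY`; the threshold itself is baked into the degree of the polynomial and cannot be lowered after the shares have been produced.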

Apple’s recently announced system for scanning images for child sexual abuse material (CSAM) before they are uploaded to the cloud also fits the revocable privacy paradigm: Apple is notified only after more than 30 images are flagged as potential CSAM (and the authorities are notified after a review by Apple). But it is important to note that the technical guarantees are less strict: what counts as CSAM is not fixed, nor is it strictly embedded in a technical rule. The database of hashes used for this purpose can easily be extended to include other pictures deemed illegal.
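A deliberately simplified sketch shows where the rule lives in such a design. Assumptions: SHA-256 stands in for Apple’s perceptual hash (NeuralHash), the private set intersection and threshold cryptography Apple described are left out entirely, and `fingerprint` and `should_report` are hypothetical names. The threshold is a constant in the code, but the set of fingerprints it is checked against is just data supplied from outside.

```python
import hashlib

REPORT_THRESHOLD = 30  # Apple is notified only when MORE than this many images match

def fingerprint(image: bytes) -> str:
    # Stand-in only: Apple's system uses a perceptual hash, not SHA-256.
    return hashlib.sha256(image).hexdigest()

def should_report(images: list[bytes], database: set[str]) -> bool:
    """Threshold rule: report once the number of matches exceeds REPORT_THRESHOLD."""
    matches = sum(fingerprint(img) in database for img in images)
    return matches > REPORT_THRESHOLD

photos = [b"photo-%d" % i for i in range(31)]
print(should_report(photos, set()))                             # False
print(should_report(photos, {fingerprint(p) for p in photos}))  # True: same code, bigger database
```

Nothing in the threshold logic changes between the two calls; only the database does, which is exactly the weaker guarantee described above.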

Moreover, the heated debate that ensued after Apple’s announcement also reminds us that any technical proposals that claim to protect privacy should be analysed in a broader context. Even though matches are only reported above a certain threshold, an initial analysis still takes place and may make people feel they are watched and surveilled regardless.
