Summary of Day #2 of the Interdisciplinary Summerschool on Privacy

Please find below a summary of the lectures given on day #2 of the Interdisciplinary Summerschool on Privacy (ISP 2016), held at Berg en Dal this week. There were lectures by Seda Gürses on privacy research paradigms and privacy engineering and Jo Pierson on platforms, privacy and dis/empowerment by design.

Seda Gürses: Privacy Research Paradigms, Privacy Engineering and SaaS

Seda started with the observation that companies are more interested in addressing privacy in a technical way now, while until recently they were only addressing privacy in a legal way.

But that raises the following questions: how do you make privacy engineering part of your system development processes, and how do you bring results from privacy research into software engineering practice? To answer these questions, we need to understand how software engineering is done in practice. In other words: the practice of software production is an important element of privacy research.

Three major shifts in software engineering are relevant:

- from shrink wrapped software → services,

- from waterfall model → agile programming, and

- from PC → cloud (she did not discuss this much)

The question is: what is the impact on computer science research in privacy?

Privacy engineering

We can distinguish three privacy research paradigms:

- Privacy as confidentiality: related to the concept of "the right to be let alone" (Warren and Brandeis). The main design principles in this field are: data minimization (using computational methods to collect less), provide properties with mathematical guarantees, avoid a single point of failure (or distribute trust, see George's talk yesterday), and make the solution open source (security is a process, it takes a village to keep a system secure). Example applications built using this paradigm are secure messaging (like Signal), or anonymous communication (like Tor).

- Privacy as control: related to the concept of informational self-determination as formulated by Westin. This is in line with data protection legislation or the Fair Information Processing Principles (FIPPs). The main design principles in this field are: transparency and accountability, as well as implementing data subject access rights. Example technologies in this paradigm are privacy policy languages and purpose-based access control. In this paradigm the service provider itself is implementing the privacy protection technologies, whereas in the first paradigm the data subjects themselves "implement" this protection (in the sense that they procure the tools or systems themselves). The service provider is not really seen as the adversary in this paradigm (which may be problematic...).

- Privacy as practice: related to the concept of freedom to construct one's own identity without unreasonable constraints (Agre and Rotenberg). The focus in this paradigm is how social relations are disrupted. This paradigm has a more social understanding of privacy. Privacy cannot be protected by technology, because "privacy is not in the machine". Example research in this paradigm is about how to give feedback to users, and how to make them aware of the potential impact of certain disclosures (cf. privacy nudges). This includes tools to make the consequences of algorithmic (machine learning) decisions and big data systems visible to the people concerned; to make the working of the machine learning algorithm transparent.
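To make the "privacy as control" paradigm a bit more concrete, here is a minimal sketch of what purpose-based access control could look like. It is only an illustration and was not shown in the lecture: the names `Record`, `PurposeError` and `read`, and the example purposes, are all hypothetical.

```python
from dataclasses import dataclass


class PurposeError(PermissionError):
    """Raised when data is requested for a purpose that was not allowed."""


@dataclass(frozen=True)
class Record:
    # Hypothetical record: a value plus the purposes it may be used for.
    subject: str
    attribute: str
    value: str
    allowed_purposes: frozenset


def read(record: Record, purpose: str) -> str:
    """Release the value only if the stated purpose is allowed for it."""
    if purpose not in record.allowed_purposes:
        raise PurposeError(
            f"{record.attribute} of {record.subject} may not be used for {purpose!r}"
        )
    return record.value


# Assumed example data: the e-mail address may be used for billing only.
email = Record(
    subject="alice",
    attribute="email",
    value="alice@example.org",
    allowed_purposes=frozenset({"billing"}),
)
```

Here `read(email, "billing")` returns the address, while `read(email, "marketing")` raises `PurposeError`. Note that the check runs at the service provider, which matches the observation above that the provider is not treated as the adversary in this paradigm.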

These communities have not been collaborating a lot. That is why Seda set up (together with others) a workshop on privacy engineering. Privacy engineering is:

the field of research and practice that designs, implements, adapts and evaluates theories, methods, techniques, and tools to systematically capture and address privacy issues when developing socio-technical systems.

This definition references two important concepts that warrant further explanation:

- Privacy theory: methods (approaches for systematically capturing and addressing privacy issues during information system development, management, and maintenance), techniques (procedures, possibly with a prescribed language or notation, to accomplish privacy-engineering tasks or activities) and tools (automated means that support privacy engineers during part of a privacy engineering process).

- Socio-technical systems: these encompass standalone privacy technology (like Tor), privacy enhancements of systems or functions (like privacy policy languages), and platforms that help to evaluate possible privacy violations.

Future research needs: empirical studies (how are privacy issues being addressed in engineering contexts), machine learning and engineering (methods, tools and techniques to address privacy and fairness), and frameworks and metrics (for evaluating effectiveness of these approaches).

The move to agile software development and services

In the nineties we had shrink wrap software: you bought software in a box. Now software is a service (SaaS): you download it, or connect to it as a web service. This all started with Jeff Bezos (CEO Amazon) in 2001/2002 sending out an instruction to all developers in the company to develop all software as a service and provide interfaces to them so other developers within Amazon could make use of this service too.

The main characteristics

- Shrink wrap software: the software binary runs client side, user has control, updates are hard, software paid in advance

- Services: thin client (the software runs at the server mostly), data "secured" by the service, collaborative, easy updates server side, pay as you use.

Implications of this shift (for privacy): there are transactions throughout the period you are using the service. Small websites providing a service (say a ticket office of a local theater) make use of many large services (service pooling) to create the service. This leads to pooling of data and intensified tracking of users. For example FullStory allows you to see everything your users are doing on your service. Users think they are talking to one service, but in fact are talking to many services (that are often part of a great many other services).

Traditionally, 60% of software development cost is maintenance. Of that, 60% is the cost of adding functionality. The agile manifesto was the result of a bottom up movement of software developers to counter this (revolting against those damn managers that don't know how to do software). The essence is to keep customers in the loop all the time, and to push out updates as often as possible. Quality assurance in services is constantly monitoring user behaviour. Often multiple analytics services are running in parallel (for example because developers forgot to turn the old system off when migrating to the new one).

Agile software and service development creates further privacy consequences. By its very nature, in agile development you are looking at how users are interacting with the system all the time: development is very data (and user) centric. Feature development is rapid: every feature is tweaked all the time (based on user behaviour). This also leads to feature inflation: a service accrues capabilities so that a basic service morphs over time into something else, something more invasive. For example, an authentication service (especially one that is shared as a basic component by many other user-facing services) can profile a user, and e.g. detect fraud.

Interestingly enough there are two competing models of privacy (Agre): the surveillance model and the capture model. In the capture model:

.. systems capture knowledge of people’s behavior, and they reconfigure them through rapid development of features that are able to identify, sequence, reorder and transform human activities. This also means that they open these human activities to evaluation in terms of economic efficiency.

This is exactly what agile development does!

Code from agile development is immature and has more vulnerabilities. Interestingly though, this does not lead to more incidents because attackers need some time to find the vulnerabilities in the code. If you release new versions very quickly, the attackers essentially have to start from scratch and will not have found a useful vulnerability before the new release is published.

In summary: what does this all mean for privacy? Privacy as control fits agile development better than privacy as confidentiality (because the latter may not be able to provide the required guarantees with every update, and in any case the agile framework requires a lot of user data in the first place). For privacy as practice, the user centric development aspect of agile development actually fits this paradigm quite well. For example, agile development allows you to detect whether users hate a certain feature and to quickly change or even remove it.

Jo Pierson: Platforms, privacy and dis/empowerment by design

Jo started with an example. In the UK child labour was common in the 19th century, for example in the cotton industry. What was one of the arguments to keep child labour? The cotton mills were built for workers the size of children: abolishing it would require manufacturers to rebuild their mills! The technical imperatives require child labour. The wrong value was embedded into the technology.

Media and communications studies (sociology, psychology, technology, humanities) investigate human and social communication on different levels (society wide, in organisations). The field tries to answer questions like: "What happens when communication becomes mediated?" It recognises that people can become both empowered and disempowered by socio-technical systems (this term understands technical systems to be 'with' people instead of 'about' people). This depends on the interplay between technology (artefact), people (practices) and society (societal arrangements).

For example, mp4 was a compression technology invented to facilitate distribution of large media files over low bandwidth networks. It got used also in a peer-to-peer context for the illegal distribution of copyrighted material.

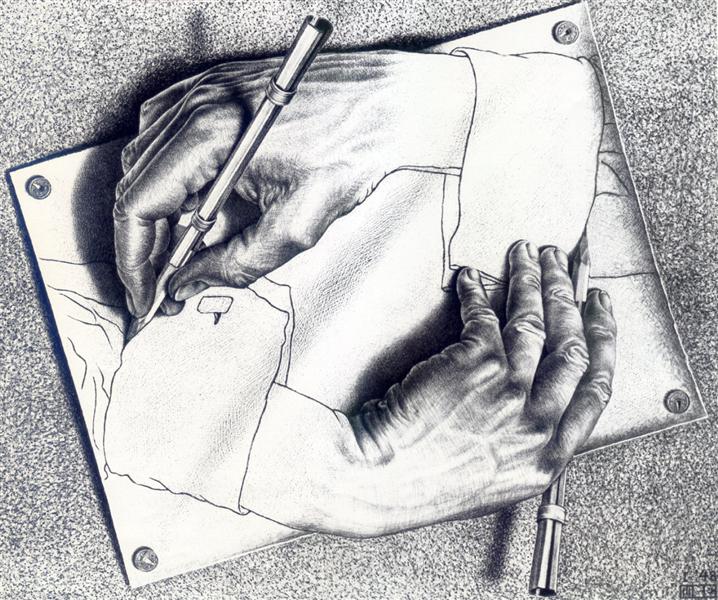

Jo uses this drawing of Escher to illustrate that society and technology mutually shape each other (similarly for design and use).

Traditional mass media was seen as curated (radio/TV broadcasting). On the other side there was interpersonal media as facilitator (telecom). Government was typically not allowed to intervene in these communications. New mass self-communication systems (online platforms like social media) fold the role of the curator and facilitator; although they often downplay their role of curator. There is strong interest both from industry and policy to 'shape' these online platforms.

Jo pointed out "the politics of platforms" (Gillespie): platforms position themselves carefully in different ways to different groups (users, advertisers, policy makers), making strategic claims about what they do and do not do, and carefully framing how they should be 'understood'. They become the curators of public discourse. Example: Airbnb putting out these advertisements in San Francisco in response to them (finally!) having to pay taxes.

The construction of such platforms has led to the following developments: datafication, commodification, selection, and connection (as explained below).

Datafication: platforms allow user actions to be translated into data that can automatically be processed and analysed. For example, open data is collected in an open way (input) but the results of the processing (output) are sold commercially. Health apps, for example, are part of larger ecosystems (sharing the data) and use double-edged logic as a bait for maximum data input.

Commodification: platforms transform objects that have an intrinsic value (having a friend, having a place to stay) by monetizing them, giving them economical value.

Selection: the fact that you do something will influence what you will see later; platforms adapt to user actions. You see a shift from expert-driven communication to user-driven communication. This also influences online publishing. For online news items, the topics are now selected based on what people find popular and what is trending, instead of what journalists themselves find important.

Connection: the meaning of being connected has changed, from human connectedness to automated connectivity.

Example of disempowerment: Siri in Russia refusing to answer questions about the location of gay clubs or gay marriage.

Another example of disempowerment: what changed with the new (2014) Facebook data policy? This was investigated by iMinds in a research project for the Belgian DPA. Their main findings:

- Horizontal and vertical expansion of the data ecosystem (because all apps got connected together).

- Unfair contract terms, not in agreement with consumer law.

- Further use of user-generated content.

- Privacy settings and terms of use, and the tracking through social plugins.

Often, some innocuous bits of data allow one to infer more sensitive information. The USEMP project created a tool called DataBait to give people insight into such data inferences. When given access to e.g. your Facebook page it tells you what Facebook can derive about you.

The EMSOC project did empirical research into how young people experience privacy on social media (which turns out to be much more refined and nuanced than the simplistic studies published by companies like Pew Research lead us to believe). Results: Facebook is omnipresent so people are very reluctant to leave, and deleting specific items is a ridiculous amount of work. Young people are aware of the privacy risk associated with using Facebook, but they are fatalistic about their options. There is a "tradeoff fallacy": in general people are resigned and fatalistic, and they come to accept that they have little control over what marketeers can learn about them. Strikingly, the more data-literate people are, the more data they are actually sharing! Similarly, advertisement is seen as a necessary evil. Younger people only view this as a problem when they learn that their own pictures are being used in advertisements to their peers ("I don't want to be a nuisance towards my friends!").

Outlook: we can create more privacy enhancing technologies (technology), we can improve governance, regulation and enforcement, like the GDPR (society), and we can create user awareness and collective action (people). The question is how to empower people without burdening them with the individual responsibility for protecting their privacy: this should stay a shared, societal responsibility.