Summary of presentations and discussions of day one of the For Your Eyes Only conference held in Brussels on November 29 and November 30. My main findings can be found here.

Panel #1 - Minors and Social Media: Between Protection and Empowerment

Panel: Alice E. Marwick (Fordham University's Department of Communication and Media Studies), Simone van der Hof (Leiden University), Tammy Schellens (Ghent University, department of educational studies), Eleni Kosta (University of Tilburg), Eva Lievens (Interdisciplinary Centre for Law & ICT, KU Leuven).

Marwick, together with boyd, aims to formulate a theory of networked privacy. This theory aims to capture shared social norms about information sharing. Previous research shows that teens and adults have similar attitudes towards privacy. Yet teens use social networks as an essential part of their lives, so for them privacy is a constant, explicit choice. Teens see the benefit of sharing, and need social media to participate in their peer groups. Unfortunately, this means they have to use a medium that is more publicly visible. For them the old (adult) push model, explicitly sending information to specific people, does not work. They want a pull model instead: they leave the information once in a place where they know their peers will find it. Teens use structural and social strategies to protect their privacy. Structural strategies include using different media for different things and contexts. Social strategies include 'steganography': group-specific phrases that you can't understand without the necessary context or background. Teens also respect social norms that depend on the social media context. It is, for example, very impolite to comment on old pictures (and you will be defriended if you do).

Schellens presented observational studies (which she said were more reliable than surveys or interviews) of teen behaviour online: what information do they put online, which privacy settings do they use, and which risks does this bring? For example, teens restrict contact information to friends only most of the time (90%), but compromising (risky) content is still shared, even with friends-of-friends, in 33% of the cases. She then claimed that the older the teens were, the riskier the content they put online. But this is of course obvious: 8-year-olds hardly drink, smoke or have sex, whereas 16-year-olds may. She showed that education helps improve awareness, but has no influence on behaviour (perhaps because of a lack of alternatives and/or peer pressure).

Van der Hof discussed the new EU data protection regulation and child privacy. The regulation has different consent requirements for children over and under 13 years old (this age limit seems to originate from US standards). This means increased costs for businesses, because they have to maintain separate processes. Moreover, a reliable (and cross-border) way to verify age online is still missing. The right to be forgotten is problematic: because information is shared with many partners, a request for deletion would have to be automated. Moreover, the "physics of the net" make deletion practically impossible. The household exception also considerably limits its effect. Finally, transparency requirements are not going to help children very much, as young children will not understand what you are telling them.

Kosta served as discussant. She observed that we (the older generation) have a view on privacy and expect them (teens) to behave accordingly. This is problematic, as teens focus on convenience. Moreover, it is often hypocritical, as parents often give their children little privacy. Age verification needs to work across borders, as services have an international audience. The 13-year age limit is derived from the US COPPA (Children's Online Privacy Protection Act). There appear to be initiatives to raise the age limit to 18. There is also a moral issue: there are game sites whose sole purpose is to profile the children using their services. Because age verification on social networks is easily bypassed, many underage children sign up anyway (often with help from their parents). This means that these networks cannot adequately tailor information to these kids. The panel felt that self-regulation of social media with respect to profiling and privacy is not a solution. The age at which children first use social media is decreasing. Yet most research is aimed at children over 13 years of age.

Panel #2 - Living Apart Together? The Propertisation of Personal Data, The Right to Privacy and the Protection of Personal Data

Panel: James B. Rule (UC Berkeley School of Law), Nadezhda Purtova (University of Groningen), Sören Preibusch (University of Cambridge), Orla Lynskey (London School of Economics), Mathias Vermeulen (European University Institute (EUI) in Florence, Italy and Research Group on Law, Science, Technology & Society at the Vrije Universiteit Brussel), Serge Gutwirth (VUB-Research group Law Science Technology & Society).

This panel explores how property rights can empower the users of social media, compared to conventional protection measures (based on more fundamental human rights).

Rule believes carefully crafted property rights are good for privacy, for three reasons: they do not prohibit other legitimate public uses, they would make opt-in the default, and they would create a climate (through the new incentives) in which grassroots organisations that care for your privacy can grow and build tools to protect it. (This was really the essence of what he had to say during a rather long-winded but eloquent monologue.)

Purtova observes that the arguments for and against privacy as a property right are the same in the EU and the US. However, she notices differences in background. In the US, propertisation would be a step forward, providing some level of general protection. In the EU we already have distinct privacy rights (both at the national and at the ECHR level). Moreover, certain aspects of these privacy rights cannot be waived; in the US this still needs to be debated. Therefore, in the EU the question is whether a property-based right to privacy would be better, perhaps in response to new technological developments.

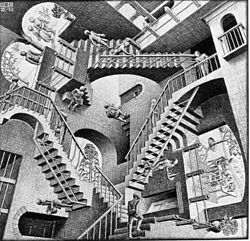

Escher, Het Trappenhuis, as an illustration of the flow of information.

Property rights may have the following benefits. Because of their "erga omnes" effect (they apply against everyone), the distinction between data controller and data processor disappears. And because of "divisibility", the owner of some piece of the cake does not get to have the whole cake: by giving away some rights, you do not give away all rights. She also introduced the rather cute abbreviation R2B4go10 for the right to be forgotten.

Lynskey argues that property rights seem intuitively right: users understand them both as a moral and an economic right. However, property rights are a US solution to a US problem. The current EU system already embodies the idea of informational self-determination, whereas in the US privacy rights are sector-specific, and some data falls between the gaps. Moreover, property has not been a very successful right in EU courts. Enforcement will remain an issue (whether based on property or on human rights) and compliance costs may be high. Also, regulation will still be needed, e.g. to deal with market failures. She observes that the difference between data protection and the fundamental right to privacy is that data protection also gives users control over their data, for example through the right to data portability in the new regulation. In closing she said: "We have had the carrot for long enough, we now need the stick!" Amen.

Preibusch observes that when buying a service we pay not only with money but also with personal data. In return we get personalisation. Companies' attitudes towards privacy differ. Some online shops differentiate themselves on data collection. Free services, however, don't: they all collect similar data. Monopolies collect more personal data (similar to charging more money for the same service). In social networks, features trump privacy. From the user perspective, he notes that when the privacy-friendly option is free, users choose it. But if they have to pay one dollar for it, it depends on the data item revealed (e.g. most users find keeping their mobile phone number private worth one dollar, but not their name). Preibusch also notes that users typically reveal a lot of information voluntarily, but that when forced to fill in mandatory fields, they leave the optional ones empty. So services should not be too pushy: this will actually give them less information.

Gutwirth served as discussant. Compared to goods, information is ubiquitous (transferable without loss), can be used simultaneously, doesn't wear out, can't be destroyed, and can be stolen (but stealing it doesn't take it away). Therefore, in law, information is always treated as special. He notes that in the EU we talk of Intellectual Rights whereas the US talks about Intellectual Property Rights. Note that you get copyright on the form of a book, but not on the information contained in it. The basic rule is free access to information, which is only restricted for particular reasons (e.g. protecting inventions, or copyright). Data protection is an extension of this idea. Pleas for propertisation of personal data disregard the public value of information (e.g. transparency, which is necessary for a democratic society). Gutwirth closed with the following remark (paraphrased): "What kind of world would we live in if I could ask money for the fact that you use the fact that I have blue eyes..."

Panel #3 - Are There Alternatives to the Consensual Exploitation on Social Media?

Panel: Andrew McStay (Bangor University), Vincent Toubiana (Bell Labs), Claudia Diaz (COSIC/ESAT KU Leuven), Rob Heyman (iMinds-SMIT Vrije Universiteit Brussel), Ike Picone (iMinds-SMIT Vrije Universiteit Brussel), Jo Pierson (iMinds-SMIT Vrije Universiteit Brussel).

Toubiana discussed alternative business models with which Facebook could earn money. For example, Facebook is increasingly becoming a global identity provider on the Internet. This could be extended into a payment provider, using the payment platform they already have for their Facebook apps. They could offer subscriptions for premium services (like LinkedIn does). Social networks could also charge money to follow others, like pheed.com does; this is only really useful if you have a lot of celebrities that people want to follow. Technical solutions to the ownership problem (as he called it) are: building distributed (P2P) social networks, using obfuscation (i.e. polluting data with noise, although Diaz later commented that this has been proven to be very hard at best), or using privacy-friendly widgets (e.g. replacing the like button with one that does not track you unless you click it). He closed by noting that users are looking for simple solutions.

McStay made clear he did not oppose advertising per se, but does oppose the heavy profiling that goes on behind the scenes. This is nothing new, however: back in the 1930s we already had information society 1.0, and coupon returns were meticulously analysed to see what works. He invoked a few philosophers (Kant and Spinoza among others) to argue that the observer deeply affects the observed system. Hence in advertising, the audience is not static: "Advertising is better characterised in terms of becoming rather than being" (which was the basis for the title of his talk, "From Being To Becoming", for which he apologised...)

Heyman started his presentation with a funny ad he got, inviting him to become a Brussels gigolo... He never found out why he got that particular ad. He discussed the notion of affordance and perceived affordance (from Donald Norman's The Design of Everyday Things) and the deliberate way Facebook designs its forms such that you will not set your privacy options too strictly. For example, instead of being given the choice to install a game or not, users now have only one option: to play the game. He closed by noting that Facebook doesn't sell its audience (it could only do that once) but sells their attention.

Diaz discussed two distinct threats: the intrusiveness of advertisers, and the mass collection and analysis of personal data. These have distinct solutions: concealment and obfuscation (the latter does not prevent the collection of the information and may lead to incorrect profiles on which (wrong) decisions are made). She notes several limitations of Privacy Enhancing Technologies (PETs): they are developed with limited resources (there are few incentives to invest in privacy), and the APIs (of e.g. Facebook) may be blocked for the tool you developed. Because of the network effect (a social network is useful when most of your friends are on it), it is hard to make people switch. Therefore, it is hard to actually deploy privacy-friendly social networks and make them a success.

Pierson served as discussant. He observes that we need to look at advertising in a new way. Newspapers are a two-sided market: both advertisers and subscribers pay. Is there a possible parallel with social networks?

Colophon

These notes are highly personal, and based on quick notes I took during the sessions. I may have totally misunderstood stuff, or left out very important stuff. If so, I apologise.